If you want to practice data structure and algorithm programs, you can go through Java coding interview questions.

In this post, we will see LRU cache implementation in java.

Problem

Design a Least recently used cache implementation in java. It should have below properties.

bounded size: It should have bounded size to take care of memory limits.

Fast access: As we are designing cache, we should be able to fetch or update entries faster.

Evict least recently used entry: Cache should evict least recently used entry if capacity is reached.

Solution

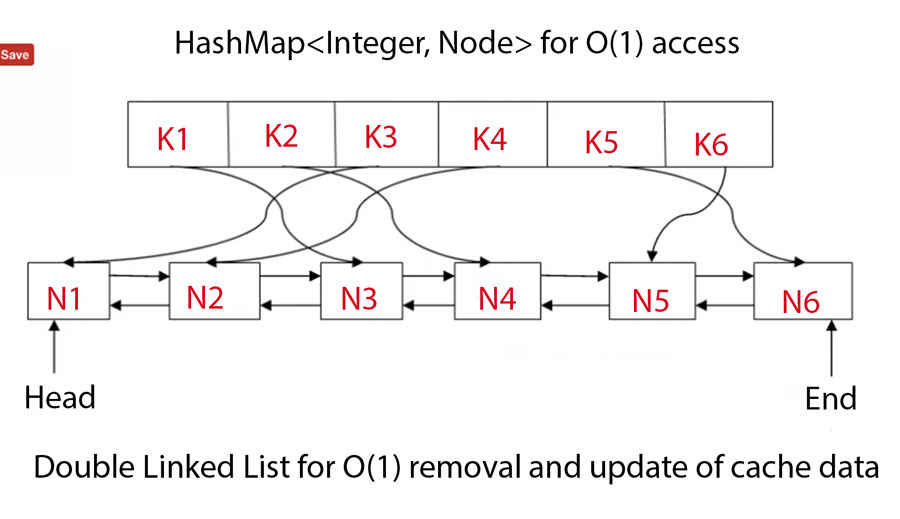

Using HashMap and Doubly linked list

As we need to do lookup in O(1) time then HashMap is obvious choice but we need to take care of least recently used entry as well.

We need to find a data structure which can remove/add in O(1) time if we already know the node. We can use a double linked list for this purpose because it provides removal/addition in O(1) time if already know about the node.

HashMap will make get operation in O(1) time and Doube linked list will make removal/addition in O(1) time.

Here is simple program for LRU cache implementation in java.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 |

package org.arpit.java2blog; import java.util.HashMap; class Node{ int key; int value; Node prev; Node next; public Node(int key, int value){ this.key = key; this.value = value; } } public class LRUCache { int capacity; HashMap<Integer, Node> map = new HashMap<Integer, Node>(); Node head=null; Node end=null; public LRUCache(int capacity) { this.capacity = capacity; } public int get(int key) { if(map.containsKey(key)){ Node n = map.get(key); delete(n); setHead(n); return n.value; } return -1; } /*This method will delete node*/ public void delete(Node node){ if(node.prev!=null){ node.prev.next = node.next; }else{ head = node.next; } if(node.next!=null){ node.next.prev = node.prev; }else{ end = node.prev; } } /*This method will make passed node as head*/ public void setHead(Node node){ node.next = head; node.prev = null; if(head!=null) head.prev = node; head = node; if(end ==null) end = head; } public void set(int key, int value) { if(map.containsKey(key)){ // update the old value Node old = map.get(key); old.value = value; delete(old); setHead(old); }else{ Node newNode = new Node(key, value); if(map.size()>=capacity){ map.remove(end.key); // remove last node delete(end); setHead(newNode); }else{ setHead(newNode); } map.put(key, newNode); } } public static void main(String[] args) throws java.lang.Exception { LRUCache lrucache = new LRUCache(4); lrucache.set(1, 100); lrucache.set(10, 99); lrucache.set(15, 98); lrucache.set(10, 97); lrucache.set(12, 96); lrucache.set(18, 95); lrucache.set(1, 94); System.out.println(lrucache.get(1)); System.out.println(lrucache.get(10)); System.out.println(lrucache.get(15)); } } |

When you run above program, you will get below output:

97

-1

As you can see, in last 4 entries, we do not have 15 as key. That’s the reason we are getting -1 for it.

Using LinkedHashMap

We can use LinkedHashMap to create LRU cache as well. LinkedHashMap has a constructor in which you can specify access order. If you pass accessOrder as true, LinkedHashMap will work on the basis of access order rather than insertion order.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 |

/** * Constructs an empty <tt>LinkedHashMap</tt> instance with the * specified initial capacity, load factor and ordering mode. * * @param initialCapacity the initial capacity * @param loadFactor the load factor * @param accessOrder the ordering mode - <tt>true</tt> for * access-order, <tt>false</tt> for insertion-order * @throws IllegalArgumentException if the initial capacity is negative * or the load factor is nonpositive */ public LinkedHashMap(int initialCapacity, float loadFactor, boolean accessOrder) { super(initialCapacity, loadFactor); this.accessOrder = accessOrder; } |

We will also override a method named removeEldestEntry() and it will return true in case size() is greater than capacity.

Let’s create a class Named LRUCache

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 |

package org.arpit.java2blog; import java.util.LinkedHashMap; import java.util.Map; class LRUCache { private LinkedHashMap<Integer, Integer> cacheMap; public LRUCache(int capacity) { cacheMap = new LinkedHashMap<Integer, Integer>(capacity, 0.75f, true) { protected boolean removeEldestEntry(Map.Entry eldest) { return size() > capacity; } }; } // This method works in O(1) public int get(int key) { return cacheMap.getOrDefault(key, -1); } // This method works in O(1) public void set(int key, int value) { cacheMap.put(key, value); } } public class LRUUsingLinkedHashMapMain { public static void main(String[] args) { LRUCache lrucache = new LRUCache(4); lrucache.set(1, 100); lrucache.set(10, 99); lrucache.set(15, 98); lrucache.set(10, 97); lrucache.set(12, 96); lrucache.set(18, 95); lrucache.set(1, 94); System.out.println(lrucache.get(1)); System.out.println(lrucache.get(10)); System.out.println(lrucache.get(15)); } } |

When you run above program, you will get output:

97

-1

As you can see, in last 4 entries, we do not have 15 as key. That’s the reason we are getting -1 for it.

That’s all about LRU cache implementation in java.

I am big fan of your blog. It has best interview preparation and concepts learning materials. Please keep adding more design implementations that’s been frequently asked in interviews these days. Thanks for your effort:)

Thank you for the kind words :). I will surely add more content on design implementations.

Thank you for posting. This is one is the best implementations of LRU cache I have seen in java.