Table of Contents

Hadoop is the open-source framework of Apache Software Foundation, which is used to store and process large unstructured datasets in the distributed environment. Data is first distributed among different available clusters then it is processed.

Hadoop biggest strength is that it is scalable in nature means it can work on a single node to thousands of nodes without any problem. Hadoop framework is based on Java programming and it runs applications with the help of MapReduce which is used to perform parallel processing and achieve the entire statistical analysis on large datasets. Distribution of large datasets to different clusters is done on the basis of Apache Hadoop software library using easy programming models.

Organizations are now adopting Hadoop for the purpose of reducing the cost of data storage. It will lead to the analytics at an economical cost which will maximize the business profitability.

For a good Hadoop architectural design, you need to take in considerations – good computing power, storage, and networking.

Hadoop Architecture

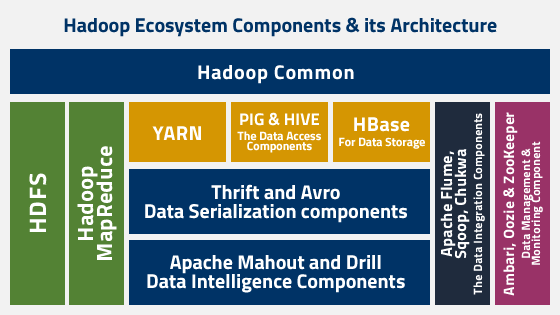

Hadoop ecosystem consists of various components such as Hadoop Distributed File System (HDFS), Hadoop MapReduce, Hadoop Common, HBase, YARN, Pig, Hive, and others. Hadoop components which play a vital role in its architecture are-

A. Hadoop Distributed File System (HDFS)

B. Hadoop MapReduce

Hadoop works on the master/slave architecture for distributed storage and distributed computation. NameNode is the master and the DataNodes are the slaves in the distributed storage. The Job Tracker is the master and the Task Trackers are the slaves in the distributed computation. The slave nodes are those which store the data and perform the complex computations. Every slave node comes with a Task Tracker daemon and a DataNode synchronizes the processes with the Job Tracker and NameNode respectively. In Hadoop architectural setup, the master and slave systems can be implemented in the cloud or on-site premise.

Role of HDFS in Hadoop Architecture

HDFS is used to split files into multiple blocks. Each file is replicated when it is stored in Hadoop cluster. The default size of that block of data is 64 MB but it can be extended up to 256 MB as per the requirement.

HDFS stores the application data and the file system metadata on two different servers. NameNode is used to store the file system metadata while and application data is stored by the DataNode. To ensure the data reliability and availability to the highest point, HDFS replicates the file content many times. NameNode and DataNode communicate with each other by using TCP protocols. Hadoop architecture performance depends upon Hard-drives throughput and the network speed for the data transfer.

NameNode

HDFS namespace is used to store all files in NameNode by Inodes which also contains attributes like permissions, disk space, namespace quota, modification timestamp, and access time. For the entire mapping of the file system into memory, NameNode is used. Two files fsimage and edits are used for tenacity during restarts.

Fsimage contains the Inodes and it contains the metadata definition. The edits file contains the details of modification that have been performed on the fsimage content. If the changes are made then instead of creating a new fsimage it is altered only.

When the NameNode starts, fsimagefile is loaded and the contents of the edits file are applied for recovering the previous state of the file system. With the time, edits file grow in number and consumes all the disk space which results in slowing the process. This problem is solved by the Secondary NameNode which copies the new fsimage and edits the file to the primary NameNode. It also updates the modified fsimage file to fstime file to track when the last time it was updated.

DataNode

DataNode is used to store the blocks of data and retrieve them when it is needed. NameNode is used to get periodical block information reports from the DataNode.

When the system starts up, each DataNode connects with the NameNode and checks if the connection is established by verifying namespace ID and the software version of the DataNode. If any of them not matches with each other, it automatically shuts off. NameNode gets block report from the DataNode as the verification of block replicas. After the registration of the first block, DataNode sends a pulse in every 3 seconds as a confirmation that DataNode is operating properly and block replicas are available to the host.

Role of MapReduce in Hadoop Architecture

MapReduce is a framework used for processing large datasets in a distributed environment. The MapReduce job is based on three operations: map an input data set in different pairs, shuffle the resulting data, and then reduce overall pairs with the same key. The job is the top level unit of MapReduce working and each job contains one or more Map or Reduce tasks.

The execution of a job starts when it is submitted to the Job Tracker of MapReduce which specifies the map, combines, and reduce functions along with the location of input and output data. When the job is received, the job tracker searches the number of splits based on input path and select Task Trackers based on their network vicinity to the data sources.

Task Tracker extracts information from the splits as the processing begins in Map phase. Records are parsed by the “InputFormat” and generate key-value pairs in the memory buffer when Map function is provoked. Combine function is used to sort all the splits from the memory buffer. After the completion of a map task, Task Tracker gives a message to the Job Tracker. Job Tracker then gives a message to selected Task Tracker to start the reduce phase. Now Task Tracker sorts the key-value pairs for each key after reading it. At last reduce function is invoked and all the values are collected into one output file.

Bottom Line

So, the best way for any organization to determine if Hadoop architecture suite their business or not is – by determining the cost of storing and processing data using Hadoop. Compare the determined cost to the cost with default process of data management. If you are an aspirant to build a career in Big Data Hadoop, Best Big Data Online Course/s may help you learn and get certified in Hadoop.

Keep learning to have a bright career ahead!