With the continuous business growth and start-ups flourishing up, the need to store a large amount of data has also increased rapidly. The companies started looking for the tools to analyze this Big Data to uncover market trends, hidden pattern, customer requirements, and other useful business information to help them make effective business decisions and gain profits.

To meet the growing demand, Apache Software Foundation launched Hadoop, a tool to store, analyze, and process Big Data. This article focuses on what exactly the Apache Hadoop is, its framework, how it works, and its significant features.

What is Hadoop?

Hadoop is an open source Java-based framework for big data processing. It is a tool used to store, analyze and process Big Data in the distributed environment. The Hadoop is an open source project of Apache Software Foundation and was originally created by Yahoo in 2006. Since then, this open source project has brought revolution in Big Data analytics and taken over the Big Data market.

In simple terms, Apache Hadoop is a tool used to handle big data. It is used to work on large sets of data distributed over a number of computers using some programming languages. Apache Hadoop is easily scalable and you can scale a number of machines through a single server.

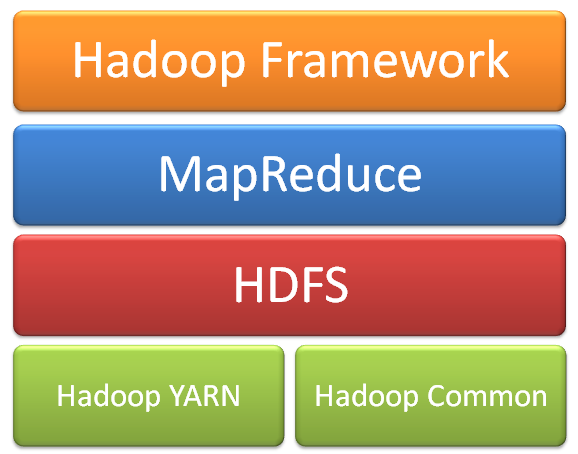

The Apache framework is composed of the following components:

- Hadoop Common: It refers to the common Java utilities and libraries that support Hadoop modules.

- Hadoop Distributed File Systems: It is the primary storage system that Hadoop applications use. It is a distributed file system that enables you to have an access to applications data.

- Hadoop MapReduce: Hadoop MapReduce is a software framework used for the parallel processing of big data.

- Hadoop YARN: YARN is the resource management technology used by Hadoop. It is responsible for resource management and job scheduling

How does Hadoop Work?

The working of Hadoop is a three-stage procedure. Let’s understand how exactly Hadoop works:

Stage 1: The job is submitted to the Hadoop job client for the required process with following details –

- Input and Output file location in the distributed file system

- The Java classes with the implementation of Map and Reduce functions

- Job configuration with the different parameter set

Stage 2: Hadoop job client transfers job along with job configuration to the JobTracker. The JobTracker is then responsible to perform for configuration distribution to slaves, tasks scheduling, and monitoring, submitting status update back to the job client.

Step 3: At different nodes, TaskTrackers then execute the tasks according to MapReduce implementation. The output generated by the Reduce function is stored on the distributed file system in output files.

Features of Apache Hadoop

Businesses have adopted Apache Hadoop because of its startling features. Let us look at the important features of Apache Hadoop.

- Scalability: Apache Hadoop uses distributed processing of local data, this allows the data to be stored, processed, and analyzed at a large scale.

- Reliability: In Apache Hadoop, the data is auto-replicated and hence can generate a redundant copy of data when it comes to system failures. Thus, Apache Hadoop has fault tolerance feature.

- Flexibility: Apache Hadoop does not follow the traditional relational database rules. It can store information and data in any format such as structured, unstructured, and semi-structured.

- Cost-effectiveness: Apache Hadoop is open source and is free of cost. This makes it cost-effective and available for all.

- Compatibility: Apache Hadoop, being a Java-based framework, is compatible with all the platforms.

Final Words

Since its launch, Hadoop has become one of the most popular tools for Big Data processing. At present, a number of tools have come in the market but Apache Hadoop is still the supermodel of Big Data ground.

This importance of Hadoop brings a number of opportunities for Hadoop professionals, and thus, the future of Hadoop skilled professionals is very bright. Hope the information given in this blog has added some more value to your knowledge.

Have any questions? Just mention in the comment section and we will be happy to get back to you